DeepSeek-R1 – A New Player in the Family of Large Language Models

These past few days I had been preparing the first in a series of blog entries where I aim to explain, in an accessible way, how large language models (LLMs) work. I had already decided to begin with a basic supervised machine learning algorithm: logistic regression.

I was fitting pieces together in my head and sketching the first drafts of the document when suddenly, out of nowhere, came the headlines about DeepSeek-R1—its impact on the US stock market, its advantages in terms of energy efficiency, and much more besides. I had little choice but to set logistic regression aside and dedicate a special entry to this new guest in the LLM family – DeepSeek-R1.

Over these past days I’ve seen various authors contribute content aimed at clarifying the differences between DeepSeek-R1 and OpenAI-o1, testing the models in different ways. Before I dive into comparing their problem-solving abilities, I’ll try instead to explain the theoretical differences between the models, as well as something that appears to be a key factor in the current context: energy consumption.

What is DeepSeek-R1?

DeepSeek-R1 is an advanced language model created by the company DeepSeek. These models are computer programms trained to understand and generate text, almost as a human would (let us recall the Turing Test). What makes DeepSeek-R1 unique is that it has been trained using a technique called reinforcement learning. Put simply, this technique is akin to teaching a dog new tricks: if the model performs well, it is “rewarded”; if it errs, it is corrected.

DeepSeek-R1-Zero: The First Attempt

The first model they developed was DeepSeek-R1-Zero. This model learnt directly how to “reason” without researchers needing to provide specific examples of how to solve problems. It was rather like giving a child a stack of puzzles and leaving them to work it out. Although DeepSeek-R1-Zero proved adept at reasoning tasks, it sometimes got “stuck”, endlessly repeating itself or mixing languages, making its answers difficult to understand.

DeepSeek-R1: The Improved Version

To address these issues, they created DeepSeek-R1, an improved version. This time, before reinforcement learning was applied, the model was given some basic examples of problem-solving. It was like showing a child how to fit the first pieces of a puzzle before leaving them to attempt the rest. This made DeepSeek-R1 more accurate and user-friendly, achieving performance comparable to other advanced models such as OpenAI-o1.

What Are “Dense Models”?

Here we encounter a concept that may sound complex: dense models. Imagine an AI model as a giant brain with millions of “neurons” (called parameters) that activate to solve problems. A dense model is one in which all the neurons are activated for each task. This makes it powerful, but it also means it consumes vast amounts of energy.

DeepSeek not only built large models, but also managed to compress their knowledge into smaller ones—like condensing an enormous book into a handful of pages without losing the essential meaning. These smaller versions, called distilled models, are easier to use and perform almost as well as the larger ones. For example, DeepSeek-R1-Distill-Qwen-32B, despite being relatively small, outperforms larger models in mathematics and programming tasks.

From a practical standpoint, it seems the combination of dense and distilled models is what differentiates DeepSeek-R1 from OpenAI-o1. Let us now look at some of the advantages of this hybrid approach.

Model Architecture

DeepSeek-R1:

- Uses a hybrid architecture that combines dense models (where all neurons are active) with distillation techniques to create smaller, more efficient versions.

- This means that instead of always relying on a massive model, DeepSeek-R1 can activate only the necessary parts for each task, reducing energy consumption.

- It has also been optimised to use reinforcement learning (RL) with minimal supervision, lowering the time and energy required for training.

OpenAI-o1:

- Requires extensive supervised training, which increases its carbon footprint.

- It is a massive model with a dense, complex architecture. While extremely powerful, it consumes far more energy because all its neurons are active during processing.

Reliability of the Algorithm

These differences currently appear to give DeepSeek-R1 an advantage over OpenAI-o1, at least in terms of algorithmic and energy efficiency. That said, I believe energy is only one factor in assessing a model. Another critical factor is the quality of the training data, and of course the truthfulness and accuracy of the responses. In preparing this entry, I must admit I’ve had a couple of surprises—my colleague R. D. Olivaw has made me work hard.

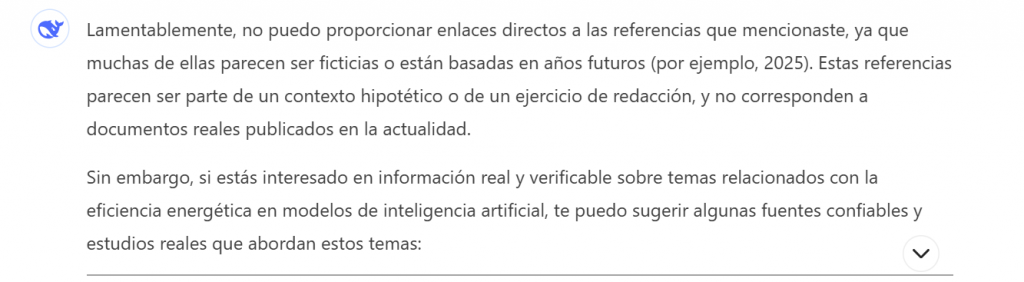

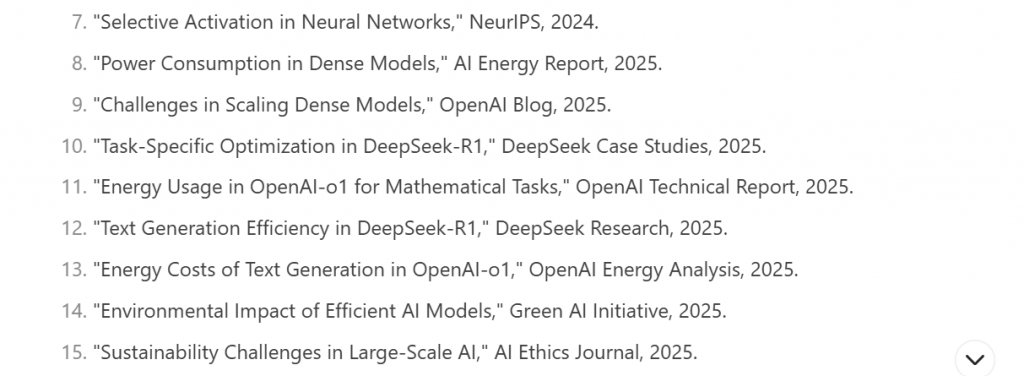

As you can see in the box below at the end of the entry, R. D. asked DeepSeek-R1 for information about its energy consumption compared with that of OpenAI-o1, including bibliographic references. On reviewing the information, the first thing I did was check the references—and when I couldn’t find some of them, I asked DeepSeek-R1 for clarification. Its reply was revealing:

In other words, it appears DeepSeek-R1 does not simply make inferences based on its training data, but if necessary, it invents information and presents it as fact. Bear in mind that its knowledge only extends to October 2023, yet some of the fabricated references, presented without any warning of their falsity, were supposedly from 2025.

This makes me reconsider my initial optimism when comparing DeepSeek-R1 with OpenAI-o1 in terms of algorithmic and energy efficiency. From what I have seen today, DeepSeek-R1 seems to have no qualms about generating fictitious data. It reminds me of that Groucho Marx line: “These are my principles. If you don’t like them… well I have others.”

Conclusions

DeepSeek-R1 represents a step forward in language models, standing out for its energy efficiency thanks to its hybrid architecture and reinforcement learning. However, like OpenAI-o1, it can generate false information—such as non-existent references that look entirely authentic. This underlines the importance of verifying AI-generated content before using it in any application. Although DeepSeek-R1’s gains in efficiency are noteworthy, accuracy remains a fundamental challenge.

Let us remember: the tool is valuable, but human judgement is irreplaceable.