Part I — The Mirage of Endless Progress

AI is now part of everyday life. Model names—GPT-3, GPT-4, GPT-5, Gemini, DeepSeek—show up in headlines next to feats that sounded like science fiction not long ago: instant translation, coherent long-form writing, solving maths problems, and images that look as if a human artist imagined them.

With every leap, an awkward question follows: does AI have a ceiling, or will progress carry on forever?

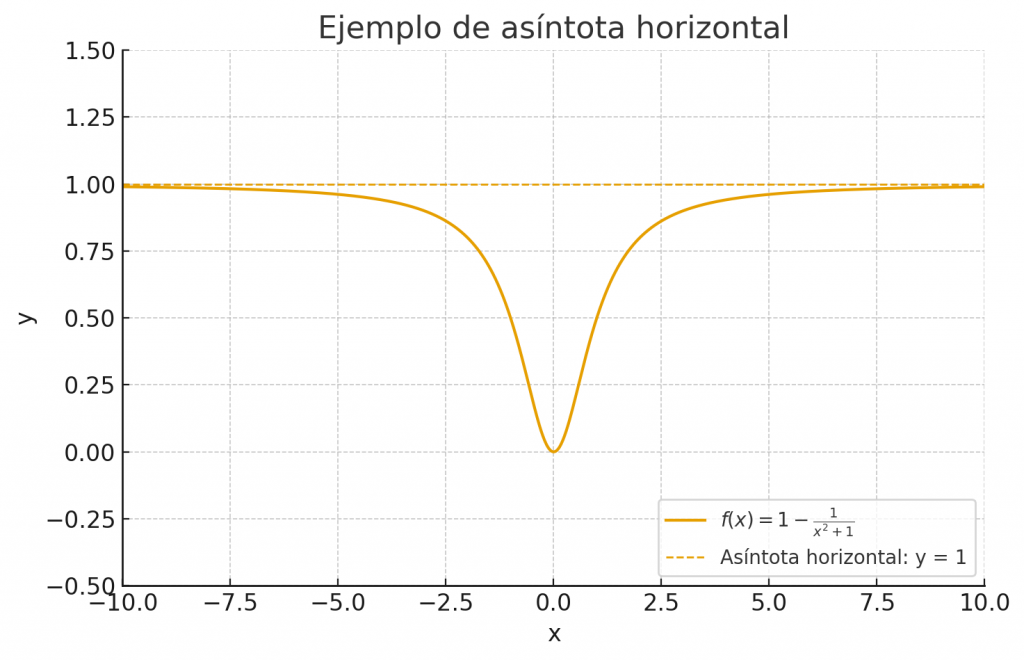

The asymptote metaphor

In maths, an asymptote is a line that a curve approaches ever more closely as it extends towards infinity. Picture a rising curve that flattens out beneath a ceiling it never quite touches (see the figure above). It’s a useful image for AI progress. The jump from GPT-3 to GPT-4 felt massive: from a clever but error-prone system to one that delivered results with real consistency. GPT-5 is better again, with more accuracy, fewer failures and a larger context window, but it feels like an upgrade, not a before-and-after moment. Early steps up a steep hill gain lots of altitude; further steps cost more and deliver less. That’s the asymptotic feel of progress.
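To make "further steps cost more and deliver less" concrete, here is a minimal sketch using a toy saturating curve, capability = ceiling × (1 − e^(−rate × effort)). The curve, and the names `capability`, `ceiling` and `rate`, are hypothetical and purely illustrative; they are not fitted to any real model’s benchmark scores.

```python
import math

def capability(effort, ceiling=1.0, rate=1.0):
    """Toy saturating curve: rises towards `ceiling` but never exceeds it."""
    return ceiling * (1.0 - math.exp(-rate * effort))

def step_gains(n_steps, ceiling=1.0, rate=1.0):
    """Capability gained by each successive, equally sized unit of effort."""
    return [capability(k, ceiling, rate) - capability(k - 1, ceiling, rate)
            for k in range(1, n_steps + 1)]

for k, gain in enumerate(step_gains(5), start=1):
    print(f"step {k}: capability {capability(k):.3f}, gain {gain:.3f}")
```

Every step costs the same effort, yet each gain is only e⁻¹ (about 37%) of the one before it. That is the asymptote in miniature: the curve keeps rising, but ever more slowly.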

Deterministic engines, not conscious minds

Today’s dominant systems are deterministic at their core: given the same weights and the same input, they compute the same output or, when answers are sampled, the same probability distribution over outputs. That reliability is a strength, but it also underlines a key absence: no consciousness, no intention, no subjective experience. The algorithms we call AI are engines of instrumental intelligence, built to solve tasks efficiently, not minds that experience the world.
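A toy next-token step shows the difference between "the same output" and "the same distribution over outputs." This is a minimal sketch under made-up assumptions: the three-word vocabulary, the scores in `LOGITS`, and the helpers `softmax`, `greedy` and `sample_sequence` are hypothetical and do not correspond to any real model’s API.

```python
import math
import random

VOCAB = ["cat", "dog", "fish"]
LOGITS = [2.0, 1.0, 0.1]  # hypothetical scores a fixed model gives one fixed prompt

def softmax(logits, temperature=1.0):
    """Convert scores to probabilities; subtracting the max keeps exp() stable."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(logits):
    """Greedy decoding: always return the most probable token."""
    probs = softmax(logits)
    return VOCAB[probs.index(max(probs))]

def sample_sequence(logits, seed, n, temperature=1.0):
    """Sampling: n random draws from the same distribution, reproducible via the seed."""
    rng = random.Random(seed)
    return rng.choices(VOCAB, weights=softmax(logits, temperature), k=n)

print(softmax(LOGITS))                       # identical on every run
print(greedy(LOGITS))                        # always "cat"
print(sample_sequence(LOGITS, seed=1, n=5))
print(sample_sequence(LOGITS, seed=2, n=5))  # may differ from seed=1
```

Greedy decoding returns the same token every time. Sampling can return different tokens, but always from the same fixed distribution, and fixing the random seed makes even the samples repeat exactly.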

What do we mean by “intelligence”?

- If intelligence = problem-solving ability, then AI can keep improving; new techniques and paradigms will keep pushing the frontier.

- If intelligence = conscious experience (subjectivity, emotions, self-awareness), then current algorithms face a global limit: they may become superb problem-solvers, but not “intelligent” in the human sense.

Near-perfect here, useless there

A state-of-the-art translator can excel at English↔Spanish, yet fail at chess. A chess engine can crush grandmasters but “miss” the meaning of a poem. This isn’t a bug—it’s the natural consequence of task-specific optimisation. What’s brilliant in one domain can be irrelevant in another.

A wall—or a staircase?

AI doesn’t seem to be charging towards a single, unbreakable wall. It looks more like climbing a staircase: each step is a paradigm. At the start of a step, advances are fast and dazzling; later, improvements become small and expensive until the curve flattens—then a new step (a fresh paradigm) appears higher up.
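One way to picture the staircase is as a sum of S-shaped (logistic) curves, one per paradigm, each with a slow start, a rapid rise and a plateau. The sketch below is illustrative only: the three paradigms, their midpoints, heights and steepness values in `PARADIGMS` are invented, as are the helper names.

```python
import math

# Hypothetical paradigms: (midpoint in time, height of the step, steepness).
PARADIGMS = [(2.0, 1.0, 3.0), (6.0, 1.0, 3.0), (10.0, 1.0, 3.0)]

def s_curve(t, midpoint, height, steepness):
    """Logistic curve: slow start, rapid rise around `midpoint`, then a plateau."""
    return height / (1.0 + math.exp(-steepness * (t - midpoint)))

def staircase(t):
    """Overall capability: the sum of one S-curve per paradigm."""
    return sum(s_curve(t, m, h, k) for m, h, k in PARADIGMS)

def growth_rate(t, dt=1e-4):
    """Central-difference derivative: how fast capability is rising at time t."""
    return (staircase(t + dt) - staircase(t - dt)) / (2 * dt)

for t in [2.0, 4.0, 6.0, 8.0, 10.0]:
    print(f"t={t:4.1f}  capability={staircase(t):.3f}  growth={growth_rate(t):.3f}")
```

Growth peaks in the middle of each step (t = 2, 6, 10) and nearly stalls on the landings between them (t = 4, 8), even though capability never stops increasing.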

Part II — Bayes Error, P vs NP, and the No Free Lunch (theoretical view)

In the first part of this post, we compared AI’s progress to climbing a staircase of asymptotic steps: each paradigm eventually levels off until a new one appears, opening the way to the next rise. To get a clearer picture of AI’s real limits, though, we need to pause and look at some core ideas from computer science and machine learning.

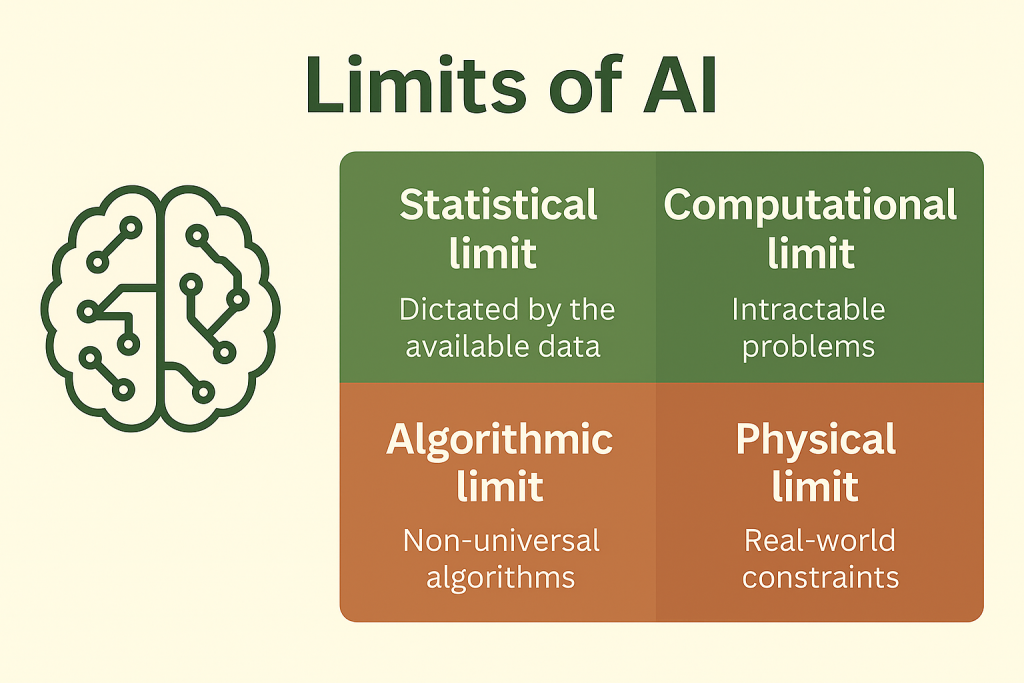

Bayes error: the statistical limit

Sometimes the data simply do not contain enough information to separate the classes perfectly: the same input can genuinely belong to different classes, as when two bell curves overlap. Even the best possible classifier, with infinite capacity and flawless training, then hits a floor: the Bayes error, the minimum achievable error for a given data distribution. AI can approach this limit, but no classifier can beat it on average over that distribution.
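A small worked case makes the floor visible. Assume, hypothetically, two equally likely classes whose single feature is Gaussian with the same spread: N(0, 1) for class 0 and N(2, 1) for class 1. In this setting the Bayes-optimal rule is a threshold at the midpoint, and its error is Φ(−|μ₁ − μ₀| / 2σ), where Φ is the standard normal CDF. The means, spread and helper names below are assumptions chosen for illustration.

```python
import math

def normal_cdf(z):
    """Standard normal CDF, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Hypothetical setup: equal priors, class 0 ~ N(MU0, SIGMA^2), class 1 ~ N(MU1, SIGMA^2).
MU0, MU1, SIGMA = 0.0, 2.0, 1.0

def threshold_error(t):
    """Expected error of the rule 'predict class 1 if x > t'."""
    miss_class0 = 1.0 - normal_cdf((t - MU0) / SIGMA)  # class 0 samples above t
    miss_class1 = normal_cdf((t - MU1) / SIGMA)        # class 1 samples below t
    return 0.5 * miss_class0 + 0.5 * miss_class1

# With equal priors and variances, the Bayes-optimal threshold is the midpoint.
bayes_threshold = (MU0 + MU1) / 2
bayes_error = normal_cdf(-abs(MU1 - MU0) / (2 * SIGMA))

print(f"Bayes error: {bayes_error:.4f}")
for t in [0.0, 0.5, 1.0, 1.5, 2.0]:
    print(f"threshold {t:.1f}: error {threshold_error(t):.4f}")
```

The Bayes error here is about 0.1587: roughly one case in six stays misclassified no matter how well the model is trained, and moving the threshold away from the midpoint only makes things worse.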

P vs NP: solvable quickly vs. checkable quickly

Another limit appears when we look at the nature of the problems we are trying to solve.

The class P groups together those problems that can be solved efficiently, that is, in a time that grows polynomially with the size of the input. For example, sorting a list of numbers is in P: algorithms such as merge sort need on the order of n log n comparisons for n elements, so even lists with millions of elements are handled quickly.

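The class NP represents problems whose solutions are easy to verify, even though finding them may be extraordinarily costly. A classic example is the Travelling Salesperson Problem in its decision form: given a set of cities and a budget, is there a route that visits each city exactly once, returns to the start, and costs no more than the budget? If someone hands us a route, checking that it meets those conditions is quick; finding one from scratch can be prohibitive. (Asking for the cheapest route outright is at least as hard, because confirming that no cheaper route exists is itself a search.)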
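The asymmetry between checking and finding shows up even on a tiny instance. This sketch uses the decision form of the problem (is there a round trip within a budget?); the four-city distance table `DIST` and the helper names are hypothetical. Checking a proposed tour takes a sort plus one pass over it, while the naive search tries all (n − 1)! orderings that start from city 0.

```python
from itertools import permutations

# Hypothetical symmetric distances between 4 cities (DIST[i][j] = cost from i to j).
DIST = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

def tour_cost(tour, dist):
    """Total cost of visiting cities in order and returning to the start."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))

def verify(tour, dist, budget):
    """Checking a proposed answer: polynomial time (a sort plus one pass)."""
    visits_each_once = sorted(tour) == list(range(len(dist)))
    return visits_each_once and tour_cost(tour, dist) <= budget

def brute_force(dist):
    """Finding the best answer by trying every tour that starts at city 0."""
    n = len(dist)
    best_cost, best_tour = None, None
    for rest in permutations(range(1, n)):
        tour = [0, *rest]
        cost = tour_cost(tour, dist)
        if best_cost is None or cost < best_cost:
            best_cost, best_tour = cost, tour
    return best_cost, best_tour

print(brute_force(DIST))              # → (80, [0, 1, 3, 2])
print(verify([0, 1, 3, 2], DIST, 80))  # → True
print(verify([0, 1, 2, 3], DIST, 80))  # → False (this tour costs 95)
```

With four cities the search is trivial, but the checker’s cost grows gently with n while the searcher’s explodes.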

The big question is whether P = NP.

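- If they were equal, every problem whose solution can be verified quickly could also be solved quickly.

- If they are different (as most experts believe), then the hardest problems in NP, including the decision version of the Travelling Salesperson Problem, have no polynomial-time algorithm: even the most advanced AI will never be able to solve every instance of them efficiently.

In other words, if P ≠ NP, then no matter how powerful our algorithms become, some problems remain intractable in the worst case by their very nature. AI can still find good approximate answers or crack many particular instances, just not all of them quickly.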
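A quick count shows why exhaustive search is hopeless long before "millions of elements." Assuming a symmetric Travelling Salesperson instance, there are (n − 1)!/2 distinct round trips through n cities, since the starting city and the direction of travel do not matter, whereas checking one proposed trip takes roughly n additions. Brute force is not the best known exact method (the Held–Karp dynamic program runs in about n²·2ⁿ steps), but that is still exponential. The helper names are hypothetical.

```python
import math

def tours_to_check(n):
    """Distinct round trips through n >= 3 cities in a symmetric instance: (n-1)!/2."""
    return math.factorial(n - 1) // 2

def verification_steps(n):
    """Rough cost of checking one proposed tour: about n additions."""
    return n

for n in [5, 10, 15, 20]:
    print(f"{n:2d} cities: verify ~{verification_steps(n)} steps, "
          f"brute force {tours_to_check(n):,} tours")
```

Going from 10 to 20 cities doubles the checking work but multiplies the number of candidate tours by more than 300 billion.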

No Free Lunch: no universal best algorithm

A perfect hammer is still terrible at turning screws. The No Free Lunch theorems formalise this intuition: averaged over all possible problems, every algorithm performs equally well, so no algorithm outperforms all others, and any gain on one class of problems is paid for on another. Every success relies on inductive bias: assumptions that match the structure of the task. There is no one model to rule them all.
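The learning version of No Free Lunch can be checked by brute force on a toy universe. Assume, hypothetically, 3-bit inputs split into four training and four unseen inputs, and average each learner’s accuracy on the unseen inputs over all 2⁸ = 256 possible labelings. The split and the three learners are illustrative choices, not a standard benchmark.

```python
from fractions import Fraction
from itertools import product

# All 8 inputs of 3 bits; a hypothetical split into 4 training and 4 unseen inputs.
INPUTS = list(product([0, 1], repeat=3))
TRAIN, TEST = INPUTS[:4], INPUTS[4:]

def always_zero(train, x):
    return 0

def majority_label(train, x):
    ones = sum(train.values())
    return 1 if 2 * ones > len(train) else 0

def nearest_neighbour(train, x):
    # Label of the training input with the fewest differing bits (ties: first found).
    closest = min(train, key=lambda t: sum(a != b for a, b in zip(t, x)))
    return train[closest]

def off_training_accuracy(learner):
    """Average accuracy on unseen inputs, over every possible target function."""
    total = Fraction(0)
    targets = list(product([0, 1], repeat=len(INPUTS)))  # all 2**8 = 256 labelings
    for labels in targets:
        target = dict(zip(INPUTS, labels))
        train = {x: target[x] for x in TRAIN}
        correct = sum(learner(train, x) == target[x] for x in TEST)
        total += Fraction(correct, len(TEST))
    return total / len(targets)

for learner in (always_zero, majority_label, nearest_neighbour):
    print(learner.__name__, off_training_accuracy(learner))  # each prints 1/2
```

Every learner scores exactly 1/2: once the training labels are fixed, the unseen labels range over all combinations equally, so any prediction is right half the time. On real tasks the targets are far from uniform, and a learner whose inductive bias matches that structure (nearest neighbour when similar inputs share labels, for instance) beats chance. The advantage comes from the assumptions, not from the algorithm alone.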

Physical limits (and economic ones)

Even if we skirt the statistical and algorithmic limits, the real world steps in:

- Landauer’s principle imposes a thermodynamic cost on erasing information: at least k_B T ln 2 of energy per bit erased, roughly 3 × 10⁻²¹ joules at room temperature.

- Speed-of-light latency caps how quickly distributed systems can communicate.

- Training costs for frontier-scale models rise steeply, placing hard economic limits on how far scale can be pushed.
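The first two physical limits are simple enough to compute directly. This sketch uses the exact SI values of the Boltzmann constant and the speed of light; the 1,000 km distance and the fibre refractive index of 1.47 (a typical figure for silica fibre) are illustrative assumptions, as are the helper names.

```python
import math

BOLTZMANN = 1.380649e-23        # J/K (exact in the 2019 SI)
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum (exact)

def landauer_limit(temperature_k):
    """Minimum energy (J) to erase one bit at the given temperature: k_B * T * ln 2."""
    return BOLTZMANN * temperature_k * math.log(2)

def one_way_latency(distance_m, refractive_index=1.0):
    """Minimum signal travel time (s); light in a medium moves at c / n."""
    return distance_m * refractive_index / SPEED_OF_LIGHT

print(f"Landauer limit at 300 K: {landauer_limit(300):.3e} J per bit")
print(f"1,000 km in vacuum: {one_way_latency(1e6) * 1e3:.2f} ms")
print(f"1,000 km in fibre (n = 1.47, assumed): {one_way_latency(1e6, 1.47) * 1e3:.2f} ms")
```

Today’s hardware dissipates far more than the Landauer bound per operation, so that floor is distant. The latency floor, by contrast, is already felt by any system spread across data centres: no engineering can get a signal across 1,000 km in less than about 3.3 ms.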

Local limits vs global limits

Taken together, these constraints suggest clear—but local—limits that depend on paradigm, data, and resources.

If, however, we insist that intelligence requires consciousness, then today’s deterministic, non-subjective systems face a global asymptote they cannot cross.

Conclusion

AI isn’t racing towards a single brick wall. It’s navigating a landscape of moving frontiers: Bayes error bounds what the data can yield, P vs NP (if, as expected, P ≠ NP) bounds what can be computed efficiently, physics and economics bound how far we can scale, and No Free Lunch reminds us there’s no universal algorithm.

The path ahead depends on how we read those frontiers:

- as a reminder that instrumental intelligence can keep growing in power while remaining non-conscious, or

- as an invitation to rethink intelligence itself—and whether consciousness is essential.

“We cannot stop the future, but we can prepare for it.”

— Hari Seldon, Foundation