Over the last few years, Artificial Intelligence has gone from being a promising technology to becoming the new “holy grail” of business innovation. Few companies want to be left behind by the wave and, in many boardrooms, the same phrase keeps coming up: “we need an AI plan, now.”

The problem is that this drive is often fuelled more by FOMO (Fear of Missing Out) than by a real understanding of what AI can —and cannot— deliver. The result? Projects rushed out the door, inflated expectations, and returns on investment that rarely match the hype.

The mirage of unlimited promise

The discourse around AI tends towards exaggeration: headlines and opinion pieces portray it as a limitless technology, capable of solving any problem and routinely compared to human intelligence. Many executives, with little familiarity with the technical nuances, appear to be swept along by the trend and implement AI in their business models prematurely, often with unexpected results.

And this is not just a perception. Two recent reports point the same way:

- According to an MIT analysis cited by Axios, 95% of surveyed organisations failed to achieve returns on their AI investments (Axios, 2025).

- Meanwhile, McKinsey showed in its State of AI study that, although the use of generative AI has spread, most companies still do not perceive a significant impact on their operating profits (McKinsey, 2024).

As we saw in the post Europe’s AI dilemma – innovate or regulate?, this is not the first time society has faced this kind of problem. On regulation, we saw that Plato and Aristotle had already studied the relationship between regulatory systems and freedoms. Likewise, this is not the first time companies have reacted in a misguided way to the “fear of missing out” on a technological development.

The Gartner hype cycle

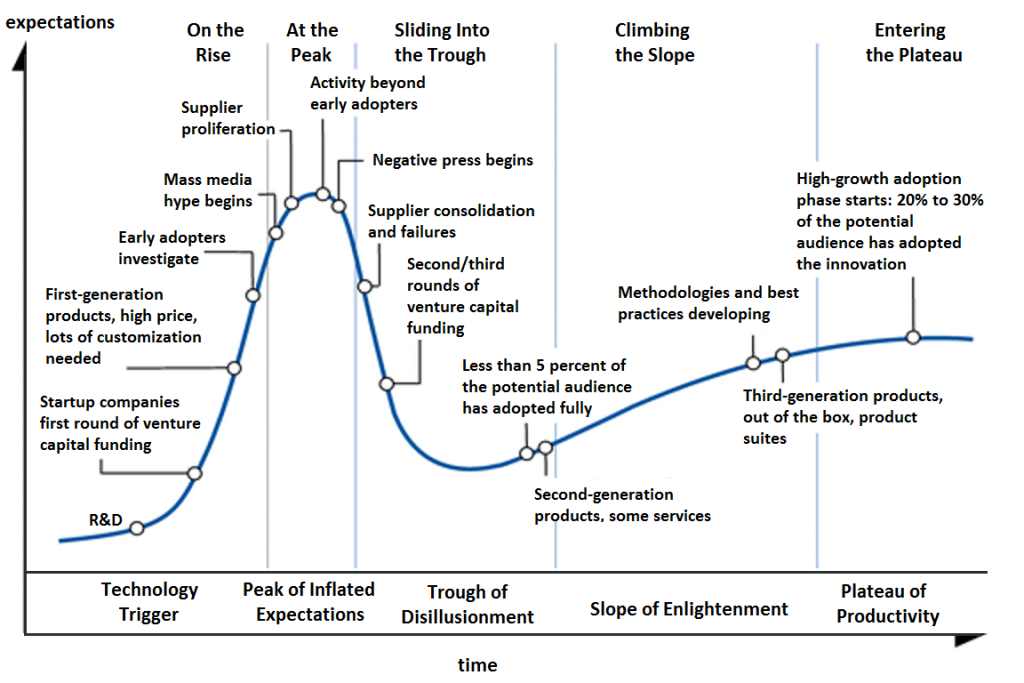

The current rush to deploy artificial intelligence, discussed in previous posts, can be understood in light of Gartner’s Hype Cycle, a model that describes how expectations around emerging technologies evolve: from the initial enthusiasm and the peak of inflated expectations, through the trough of disillusionment, to the stage of productive maturity (figure 1). The framework was introduced by the consultancy Gartner in the 1990s and systematised in the book Mastering the Hype Cycle: How to Choose the Right Innovation at the Right Time (Fenn & Raskino, Harvard Business Press, 2008).

In the case of AI, many organisations today seem to be at that critical point between unbridled enthusiasm and the pressing need to find sustainable applications that generate real returns.

We have seen this before

Gartner’s Hype Cycle began to be used in the mid-1990s as a way of systematising something technology analysts had already observed: many emerging technologies follow a recurring pattern of exaggerated enthusiasm, followed by disappointment and, in some cases, consolidation.

Among the technologies that served as an initial reference for building and validating the model were:

- First-wave Artificial Intelligence (AI): in the 1980s there was a boom in expert systems, followed by the so-called AI Winter when expectations were not met.

- Virtual reality: heavily publicised in the late 1980s and early 1990s, with large investments in hardware and entertainment, but without achieving widespread practical applications at that time.

- Distributed / grid computing: it anticipated “cloud computing”, but in the 1990s it faced enormous technical and interoperability challenges.

- E-business and commercial internet: the enthusiasm that began in the mid-1990s culminated in the dot-com bubble, a textbook example of inflated expectations followed by a trough of disillusionment before the web consolidated as productive infrastructure.

Judging by the results in the reports cited earlier, AI appears to be passing the peak of inflated expectations, if it has not already passed it, and the press is beginning to highlight negative results associated with the technology.

Why do business expectations drop so sharply in Gartner’s model?

According to Gartner, the abrupt fall into the “Trough of Disillusionment” occurs because:

- Expectations inflated by marketing and media: in the initial phase, consultancies, start-ups, and specialist press exaggerate what the technology can do immediately. In this case, exaggeration is not limited to specialist media; it also includes general media, which have undoubtedly contributed to generating an all-powerful image of AI.

- Underestimation of technical barriers: the real complexity of implementing robust solutions is only discovered during the implementation phase. At this point, businesses might benefit from a strategy widely used in science: the pilot study.

- Limited returns in initial projects: pilot studies and proofs of concept rarely live up to their commercial promises.

- Shortage of talent and an immature ecosystem: a lack of standards, trained professionals, and clear use cases generates frustration. It is somewhat naïve to try to bolt AI-related strategies onto an existing business model without the talent and infrastructure needed to make them work.

- Investment fatigue: after a period of enthusiasm, investors and executives perceive that the benefits do not justify the cost and pull back.

All this creates a collective perception that “the technology does not work”, when in reality the cause is the misalignment between expectations and the technology’s actual maturity.

In summary: the “trough” is not inevitable in itself, but rather the result of a clash between inflated narratives and technical realities. From past experiences we can learn that the key lies in aligning expectations, progressing with realistic use cases, and maintaining transparency about limitations.

The reality is more sober than the hype. AI does have enormous potential, but it also comes with practical limitations: it requires quality data, costly infrastructure, specialised talent, proper training and, above all, a clear strategy for integration into the business.

Conclusion

AI isn’t a magic ticket to instant success. It’s a powerful tool — and when used with purpose, it can reshape entire industries. But rushing in just because everyone else is doing it is usually a shortcut to disappointment.

The real challenge isn’t to wave around an “AI plan” at the next board meeting. It’s to pinpoint real problems where AI can genuinely add value. That means asking the tough questions: do we have the right data, the right infrastructure, the right people and a culture ready to make it work?

So the key question isn’t “do we have AI?” but “do we have a problem that AI is the best answer to?” Sometimes it’s smarter to pause, reflect, and adopt with intent. Better to be late with a purpose than early with empty promises. In the race for innovation, strategy and focus usually win over blind speed.

“Aimless extension of knowledge, however, which is what I think you really mean by the term curiosity, is merely inefficiency. I am designed to avoid inefficiency.” — R. Daneel Olivaw