Over the past few years, Generative AI has transformed content creation through its capacity to produce text, images, and code on demand. Tools such as ChatGPT and Midjourney have demonstrated how effectively large models can generate outputs when prompted with precision. However, despite their impressive results, these systems remain fundamentally reactive: each output depends entirely on external input, and no internal mechanism exists to plan, verify, or adapt beyond that scope.

Agentic AI represents the next stage in this technological evolution. Rather than producing isolated responses, agentic systems are designed to execute structured workflows that include planning, verification, and adaptation. They transform static generation into a process-driven framework, where each operation follows predefined logic and decision points.

In this entry, we explore the potential of Agentic AI through a concrete example: the development of an agent that automatically reviews recent news, identifies the most relevant AI-related stories, and prepares a fact-checked post suitable for publication on LinkedIn. The project’s code is available on GitHub.

This project serves as a hands-on demonstration of how frameworks like LangGraph can orchestrate multi-step workflows that integrate memory, verification, and decision logic — key elements that distinguish Agentic AI from traditional generative systems.

The problem: Why Generative AI falls short

Generative AI has proven transformative, but it still exhibits structural limitations that constrain its operational autonomy.

- No self-verification: A generative model can produce fluent and convincing text, but it lacks internal mechanisms to assess factual accuracy or validate claims against source material. As discussed in previous entries, this can result in incorrect or misleading information — a phenomenon commonly referred to as hallucination in the AI field.

- No memory: Each interaction is processed in isolation. Context is not retained between exchanges and must be reintroduced by the user or application at every step.

- No decision logic: Generative systems do not manage multi-step reasoning, task prioritisation, or adaptive sequencing within a single workflow.

- No orchestration: They cannot independently coordinate multiple tools, APIs, or data sources to complete complex operations.

Consequently, even the most advanced language models function as highly capable but context-limited systems: they can produce coherent outputs, yet they cannot execute an entire process from initiation to completion. Without persistent memory, explicit goals, or verification mechanisms, Generative AI remains a static generation engine — powerful in expression, but limited in procedural depth.

What makes AI “Agentic”?

Agentic AI addresses these limitations by introducing structured agency — the capacity to execute predefined objectives through coordinated, feedback-driven processes. Instead of producing isolated responses, an agentic system operates through a goal-oriented loop consisting of observation, planning, execution, verification, and adaptation. Each of these stages follows explicit logic established by the developer. This behaviour does not arise spontaneously; it results from deliberate system design, configuration, and fine-tuning to ensure that the workflow performs its intended function with consistency and reliability.

This transformation relies on several key capabilities:

- Autonomous decision logic: the system executes predefined decision rules or conditional branches that determine subsequent operations based on intermediate results.

- Output evaluation: processes are implemented to assess generated results against predefined criteria or verification mechanisms.

- Feedback-based optimisation: iterative refinement mechanisms adjust parameters or strategies according to performance metrics or error signals.

- Goal-directed execution: the workflow is maintained through task-level objectives that guide sequencing, prioritisation, and adaptation to contextual variables.

- Tool orchestration: coordinated use of external APIs, data sources, and computational modules to complete multi-stage operations.

- State and memory management: structured data persistence that stores contextual information across steps, supporting continuity and cumulative context handling.

Together, these properties define Agentic AI as a structured computational framework — an interconnected ecosystem of processes capable of coordinating complex workflows, incorporating feedback for iterative optimisation, and interoperating effectively with human decision-making environments.

Demonstration project: The LinkedIn News Agent

To make these ideas tangible, R. Daneel Olivaw and I developed a project called the LinkedIn News Agent, built on top of the LangGraph framework. This project illustrates how Agentic AI goes far beyond simple text generation by orchestrating an entire workflow, from information discovery to publication, in an automated, verifiable pipeline.

The project is organised as a miniature editorial workflow composed entirely of AI agents, each responsible for a specific role but all working together towards a common goal: producing professional, fact-checked, and engaging LinkedIn posts about the latest AI news.

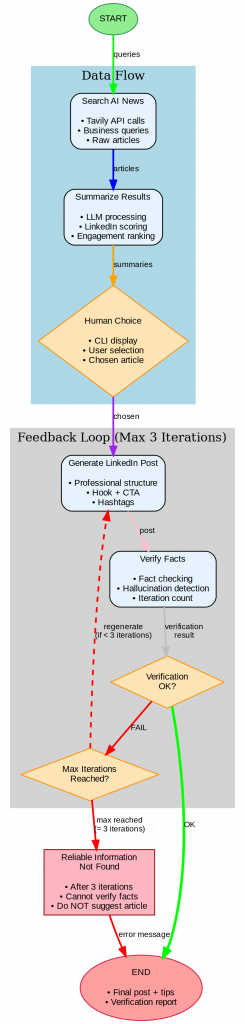

Phase 1: Data flow

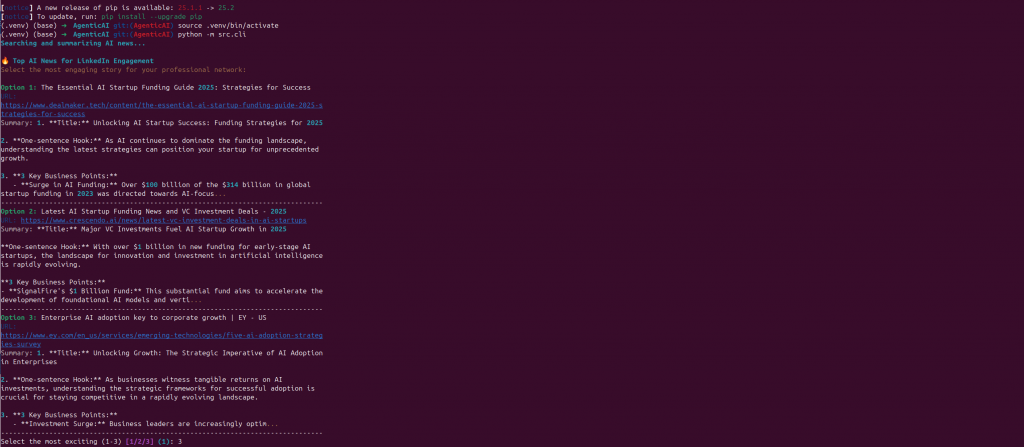

The workflow begins with the Search AI News component, where the system queries the Tavily API using a series of predefined business-oriented prompts such as “AI startup funding” or “AI job market trends.” This step retrieves a set of raw articles relevant to recent AI activity in industry and research.
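The search step can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: `collect_articles`, `fake_search`, and the `query` field are hypothetical names, and the search backend is passed in as a plain function so that the sketch runs offline. In the real agent that function would wrap the Tavily API call.

```python
from typing import Callable, Dict, List

# The two example queries quoted above; the project's full list is assumed to be longer.
QUERIES = ["AI startup funding", "AI job market trends"]

Article = Dict[str, str]
SearchFn = Callable[[str], List[Article]]


def collect_articles(search: SearchFn, queries: List[str]) -> List[Article]:
    """Run every query and merge the results, dropping duplicate URLs."""
    seen = set()
    merged = []
    for query in queries:
        for article in search(query):
            url = article["url"]
            if url in seen:
                continue  # the same story often matches several queries
            seen.add(url)
            merged.append({**article, "query": query})
    return merged


def fake_search(query: str) -> List[Article]:
    """Offline stand-in for the real search call (assumption, not Tavily)."""
    shared = {"title": "Shared story", "url": "https://example.com/shared"}
    own = {"title": f"Story about {query}", "url": f"https://example.com/{len(query)}"}
    return [shared, own]


articles = collect_articles(fake_search, QUERIES)
```

Deduplicating by URL at this stage keeps the later summarisation step from scoring the same story twice.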

Next, the Summarise Results module processes these articles using an LLM to extract the most relevant insights and generate LinkedIn-ready summaries. Each summary is scored for professional relevance and engagement potential, ensuring that the most informative and discussion-worthy items are prioritised.
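One way to picture the prioritisation is a weighted ranking over the two scores. The sketch below assumes that each score is a number on a 0 to 10 scale and that the two are combined with a fixed weight; the `Summary` class, the weight, and the scale are illustrative assumptions rather than the project's actual scoring scheme.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Summary:
    title: str
    relevance: float   # professional relevance, assumed to be on a 0-10 scale
    engagement: float  # engagement potential, assumed to be on a 0-10 scale


def rank_summaries(summaries: List[Summary], top_n: int = 3,
                   relevance_weight: float = 0.6) -> List[Summary]:
    """Return the top_n summaries by weighted score, best first."""
    def score(item: Summary) -> float:
        return (relevance_weight * item.relevance
                + (1 - relevance_weight) * item.engagement)

    return sorted(summaries, key=score, reverse=True)[:top_n]


candidates = [
    Summary("A", relevance=9, engagement=2),
    Summary("B", relevance=5, engagement=9),
    Summary("C", relevance=8, engagement=8),
    Summary("D", relevance=1, engagement=1),
]
top_three = rank_summaries(candidates)
```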

Finally, a Human Choice step introduces a human-in-the-loop component. The system presents the top-ranked summaries in a command-line interface, allowing the user to select the most suitable article for publication. This design ensures editorial oversight while maintaining automation efficiency.
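A minimal sketch of such a selection prompt is shown below. The function names are hypothetical; the point is only that input validation is kept separate from the interactive prompt, so the validation logic can be checked without a terminal.

```python
from typing import List, Optional


def parse_choice(raw: str, option_count: int) -> Optional[int]:
    """Return a zero-based index for valid input, or None otherwise."""
    try:
        number = int(raw.strip())
    except ValueError:
        return None
    if 1 <= number <= option_count:
        return number - 1
    return None


def ask_user(titles: List[str]) -> int:
    """Prompt on the command line until a valid option is entered."""
    for position, title in enumerate(titles, start=1):
        print(f"{position}. {title}")
    while True:
        raw = input(f"Select an article (1-{len(titles)}): ")
        choice = parse_choice(raw, len(titles))
        if choice is not None:
            return choice
        print("Please enter one of the listed numbers.")
```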

Phase 2: Feedback loop (max 3 iterations)

Once an article is selected, the workflow transitions into the Feedback Loop, where the agent generates, verifies, and refines the final LinkedIn post.

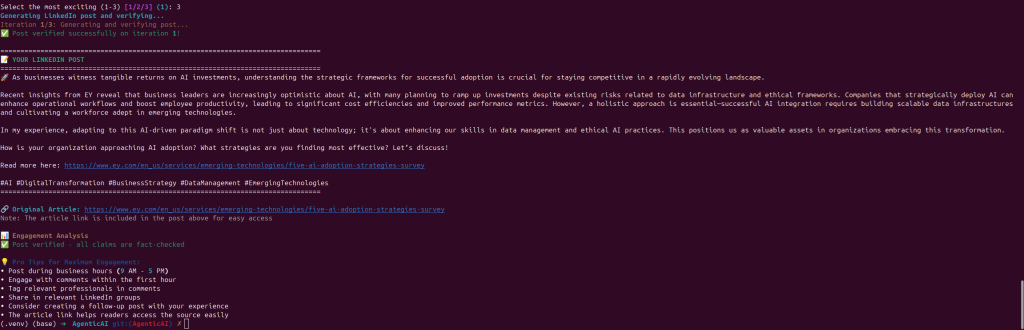

The Generate LinkedIn Post module structures the content using best practices for LinkedIn engagement — adding a clear hook, a concise insight, and an effective call-to-action (CTA). The post is then passed to the Verify Facts component, which cross-checks all claims against the original source to detect inaccuracies or hallucinations.

If the verification fails, the system automatically triggers a regeneration step, incorporating the feedback to correct factual inconsistencies. This loop can repeat up to three iterations, progressively improving the post.
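Incorporating feedback can be as simple as appending the verifier's findings to the generation prompt on a retry. The sketch below is an assumption about how this might look; the prompt wording and the `build_post_prompt` name are illustrative and not taken from the project.

```python
from typing import List


def build_post_prompt(article_text: str, feedback: List[str]) -> str:
    """Compose the generation prompt, adding corrections on a retry."""
    parts = [
        "Write a LinkedIn post with a clear hook, a concise insight, "
        "and a call-to-action.",
        "Use only facts stated in the source article below.",
        f"SOURCE ARTICLE:\n{article_text}",
    ]
    if feedback:
        # Only present on a regeneration attempt.
        issues = "\n".join(f"- {item}" for item in feedback)
        parts.append(
            "A previous draft failed fact verification. "
            f"Fix or remove these claims:\n{issues}"
        )
    return "\n\n".join(parts)


first_prompt = build_post_prompt("Acme raised $5M.", [])
retry_prompt = build_post_prompt("Acme raised $5M.", ["Draft claims $50M"])
```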

If after three attempts reliable information still cannot be confirmed, the process concludes with a “Reliable Information Not Found” status, preventing the publication of unverified content. Otherwise, once the post passes verification, the system generates the final output — a polished LinkedIn post accompanied by a verification report for transparency.
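The control flow described above can be sketched in a few lines. The generator and verifier are passed in as plain functions (hypothetical signatures), so the sketch shows only the bounded loop and its two possible outcomes, not the LLM calls behind them.

```python
from typing import Callable, List, Tuple

MAX_ITERATIONS = 3  # the limit stated in the article

GenerateFn = Callable[[List[str]], str]             # feedback -> draft
VerifyFn = Callable[[str], Tuple[bool, List[str]]]  # draft -> (passed, issues)


def run_feedback_loop(generate: GenerateFn, verify: VerifyFn) -> dict:
    """Generate and verify a post until it passes or the limit is reached."""
    feedback: List[str] = []
    for iteration in range(1, MAX_ITERATIONS + 1):
        draft = generate(feedback)
        passed, issues = verify(draft)
        if passed:
            return {"status": "verified", "post": draft, "iterations": iteration}
        feedback = issues  # carried into the next generation attempt
    return {
        "status": "Reliable Information Not Found",
        "post": None,
        "iterations": MAX_ITERATIONS,
        "issues": feedback,
    }


def demo_generate(feedback: List[str]) -> str:
    """Toy generator: corrects the figure once it receives feedback."""
    return "Acme raised $5M" if feedback else "Acme raised $50M"


def demo_verify(draft: str) -> Tuple[bool, List[str]]:
    """Toy verifier: rejects the one figure known to be wrong."""
    if "$50M" in draft:
        return False, ["$50M is not in the source"]
    return True, []


result = run_feedback_loop(demo_generate, demo_verify)
```

With these toy functions the first draft fails, the second passes, and the loop stops after two iterations.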

Each of these components operates on a shared memory and state, coordinated by LangGraph’s workflow engine. Together, in my opinion, they provide a concrete example of what “agentic” really means: a system that follows steps defined in advance by the developer (in this case, the author) so that the agent produces the expected results.

Before exploring the technical framework, it’s worth analysing what makes this feedback loop so central to the system’s reliability.

The heart of Agentic AI: The verification feedback loop

The most significant innovation in the LinkedIn News Agent, and the clearest example of Agentic AI’s capabilities, is the verification feedback loop. This mechanism allows the system to identify discrepancies, apply corrective procedures, and refine its outputs through structured iteration.

Process overview:

- Initial generation – The system generates a LinkedIn post using the selected article as input data, producing a structured text that highlights key insights and contextual information.

- Fact verification – A verification module compares each statement in the draft against the source material. Any unverified or inconsistent claims are recorded and categorised for correction.

- Decision point – The workflow evaluates the verification results against predefined quality and accuracy thresholds to determine whether further processing is required.

- Regeneration – If the verification step fails, the system re-executes the generation process, incorporating the feedback data to adjust the content accordingly.

- Iteration limit – After a maximum of three verification–regeneration cycles, the system either outputs a verified post or produces a diagnostic report summarising unresolved issues.
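To make the fact verification and decision point steps more concrete, here is a deliberately naive stand-in. The project's actual verifier is not shown in this entry; the sketch below swaps in a simple lexical check that only looks at numeric claims and tests whether each figure in the draft also appears in the source. The function names and the `max_unverified` threshold are assumptions.

```python
import re
from typing import Dict, List

# Matches figures such as "5", "12.5", "40%" or "$3.2".
NUMBER_PATTERN = re.compile(r"\$?\d+(?:\.\d+)?%?")


def verify_claims(draft: str, source: str) -> Dict[str, List[str]]:
    """Sort sentences with numeric claims into verified and unverified."""
    source_numbers = set(NUMBER_PATTERN.findall(source))
    report: Dict[str, List[str]] = {"verified": [], "unverified": []}
    for sentence in re.split(r"(?<=[.!?])\s+", draft.strip()):
        numbers = NUMBER_PATTERN.findall(sentence)
        if not numbers:
            continue  # no numeric claim to check in this sentence
        supported = all(number in source_numbers for number in numbers)
        report["verified" if supported else "unverified"].append(sentence)
    return report


def needs_regeneration(report: Dict[str, List[str]], max_unverified: int = 0) -> bool:
    """Decision point: regenerate when too many claims are unsupported."""
    return len(report["unverified"]) > max_unverified


source_text = "Acme raised $5 million from 12 investors, growing 40%."
draft_text = "Acme raised $50 million. Growth hit 40%. Exciting times!"
report = verify_claims(draft_text, source_text)
```

Here the first sentence is flagged because "$50" never appears in the source, which is enough to send the workflow back to regeneration.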

This iterative correction loop represents a shift from static text generation to stateful, feedback-driven computation. Rather than producing a single output, the workflow uses verification results to dynamically control execution paths until a stability condition or termination criterion is reached.

In operational terms, this design enhances reliability and traceability: each output is verifiably grounded in source data, and every processing step is logged and auditable. The outcome is not merely automated content creation, but a reproducible and transparent system for generating validated information with measurable quality controls.

Results

Figure 2 shows a terminal window displaying the execution of the LinkedIn News Agent project. The program searches for and summarises recent AI-related news, presenting three article options optimised for LinkedIn engagement. Each option includes the article title, URL, a one-sentence hook, and three key business points. At the end, the user is prompted to select the most relevant article for further processing by entering a number between 1 and 3.

Figure 3 displays the command-line output of the LinkedIn News Agent after generating and verifying a potential LinkedIn post. The system successfully completes the first verification iteration, showing the fully formatted post, including the main text, article link, hashtags, and engagement tips. Verification and engagement analysis confirm that all claims in the post are fact-checked.

The LinkedIn News Agent successfully demonstrates the capabilities of an Agentic AI system by completing a full content generation workflow, from information retrieval to a verified, publication-ready post, with human input limited to the article selection step. As shown in Figure 4, the agent produces a polished, fact-checked LinkedIn post that includes a concise hook, structured insights, and relevant hashtags, all derived from validated source material. The final output maintains a professional tone and clear logical flow, illustrating the agent’s ability to integrate search, summarisation, verification, and composition modules within a coordinated process. This outcome highlights not only the system’s capacity for reliable automation but also the potential of agentic architectures to support transparent, high-quality information dissemination in professional contexts.

LangGraph: The technical backbone

The LinkedIn News Agent is built on LangGraph, a framework that extends the prompt-chaining concept introduced by LangChain. LangGraph enables the creation of complete, stateful workflows where multiple components — such as information retrieval, summarisation, verification, and text generation — operate in a coordinated process.

Acting as an orchestration layer, LangGraph manages execution order, data exchange, and conditional branching. The workflow is defined through a build_workflow function that sequences each stage: retrieve AI news → summarise key items → pause for human selection → generate and verify the LinkedIn post. The human-in-the-loop step ensures editorial oversight within an automated pipeline.
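The sketch below illustrates the idea of named steps connected by routing rules. It is a plain-Python stand-in written for this entry, not LangGraph's API and not the project's `build_workflow`: the `run_graph` helper, the toy node bodies, and the state keys are all assumptions. In LangGraph itself, the equivalent building blocks are a `StateGraph` with nodes, edges, and conditional edges.

```python
from typing import Any, Callable, Dict, Tuple

State = Dict[str, Any]
Node = Callable[[State], State]   # returns a partial state update
Router = Callable[[State], str]   # returns the name of the next node

END = "END"


def run_graph(nodes: Dict[str, Node], routers: Dict[str, Router],
              entry: str, state: State) -> State:
    """Execute nodes from entry until a router returns END."""
    current = entry
    state = {**state, "path": []}
    while current != END:
        update = nodes[current](state)
        state = {**state, **update, "path": state["path"] + [current]}
        current = routers[current](state)
    return state


def build_workflow(verify: Node) -> Tuple[Dict[str, Node], Dict[str, Router]]:
    """Wire the stages: search, summarise, human choice, generate, verify."""
    nodes: Dict[str, Node] = {
        "search": lambda s: {"articles": ["a", "b", "c"]},
        "summarise": lambda s: {"summaries": [x.upper() for x in s["articles"]]},
        "human_choice": lambda s: {"selected": s["summaries"][0]},
        "generate": lambda s: {"draft": f"Post about {s['selected']}",
                               "iterations": s.get("iterations", 0) + 1},
        "verify": verify,
    }

    def after_verify(s: State) -> str:
        # Conditional branch: stop on success or after three attempts.
        if s["verified"] or s["iterations"] >= 3:
            return END
        return "generate"

    routers: Dict[str, Router] = {
        "search": lambda s: "summarise",
        "summarise": lambda s: "human_choice",
        "human_choice": lambda s: "generate",
        "generate": lambda s: "verify",
        "verify": after_verify,
    }
    return nodes, routers


# Toy verifier that only accepts the second draft.
nodes, routers = build_workflow(lambda s: {"verified": s["iterations"] >= 2})
final = run_graph(nodes, routers, "search", {})
```

The recorded `path` shows the conditional branch in action: the run visits `generate` and `verify` twice before reaching the end.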

A key strength of LangGraph lies in its ability to preserve state and context across executions. Rather than restarting at each prompt, it maintains queries, summaries, verification results, and iteration counts — ensuring continuity and reproducibility. Conditional routing allows the process to follow different paths based on verification outcomes, encoded as explicit rules within the workflow graph.
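As a rough illustration of what such a shared state might contain, the sketch below defines a typed dictionary with the four kinds of data mentioned above and shows how a step can contribute a partial update. The field names and helper functions are assumptions made for this example, not the project's actual schema.

```python
from typing import List, TypedDict


class AgentState(TypedDict, total=False):
    queries: List[str]
    summaries: List[str]
    verification_results: List[dict]
    iteration_count: int


def apply_update(state: AgentState, update: AgentState) -> AgentState:
    """Return a new state with a step's partial update merged in."""
    merged: AgentState = {**state, **update}
    return merged


def record_verification(state: AgentState, passed: bool,
                        issues: List[str]) -> AgentState:
    """Partial update produced by a verification step."""
    history = state.get("verification_results", []) + [
        {"passed": passed, "issues": issues}
    ]
    return {
        "verification_results": history,
        "iteration_count": state.get("iteration_count", 0) + 1,
    }


state: AgentState = {"queries": ["AI startup funding"]}
state = apply_update(state, record_verification(state, False, ["unsupported figure"]))
state = apply_update(state, record_verification(state, True, []))
```

Because each step returns only the keys it changes, earlier data such as the queries survives every iteration, and the verification history doubles as an audit trail.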

In summary, LangGraph provides the infrastructure for building deterministic, auditable, and feedback-driven AI workflows, transforming large language models into reliable components within controlled computational systems.

Conclusion

Agentic AI marks a methodological shift from stateless text generation (where each response is produced independently) to stateful, feedback-controlled computation. By integrating memory, conditional logic, and verification cycles, frameworks like LangGraph show how multi-step workflows can operate with consistency, traceability, and reproducibility.

Unlike conventional generative models, agentic systems preserve context between operations, assess intermediate results, and adjust subsequent steps according to predefined criteria — enabling deterministic behaviour and explicit quality control.

Ultimately, Agentic AI represents a computational paradigm focused on control, verification, and context retention. Its potential lies not in scaling model size, but in developing architectures that ensure transparency, reliability, and auditability across both research and enterprise contexts.

“The evolution of AI does not depend on larger models, but on architectures capable of preserving state, verifying results, and iterating with precision.”